A noisy elephant in the room:

Is your out-of-distribution detector robust to label noise?

CVPR'24

Galadrielle Humblot-Renaux, Sergio Escalera, and Thomas B. Moeslund

Visual Analysis and Perception lab, Aalborg University, Denmark

Department of Mathematics and Informatics, University of Barcelona, Spain

Computer Vision Center, Spain

📌 Abstract

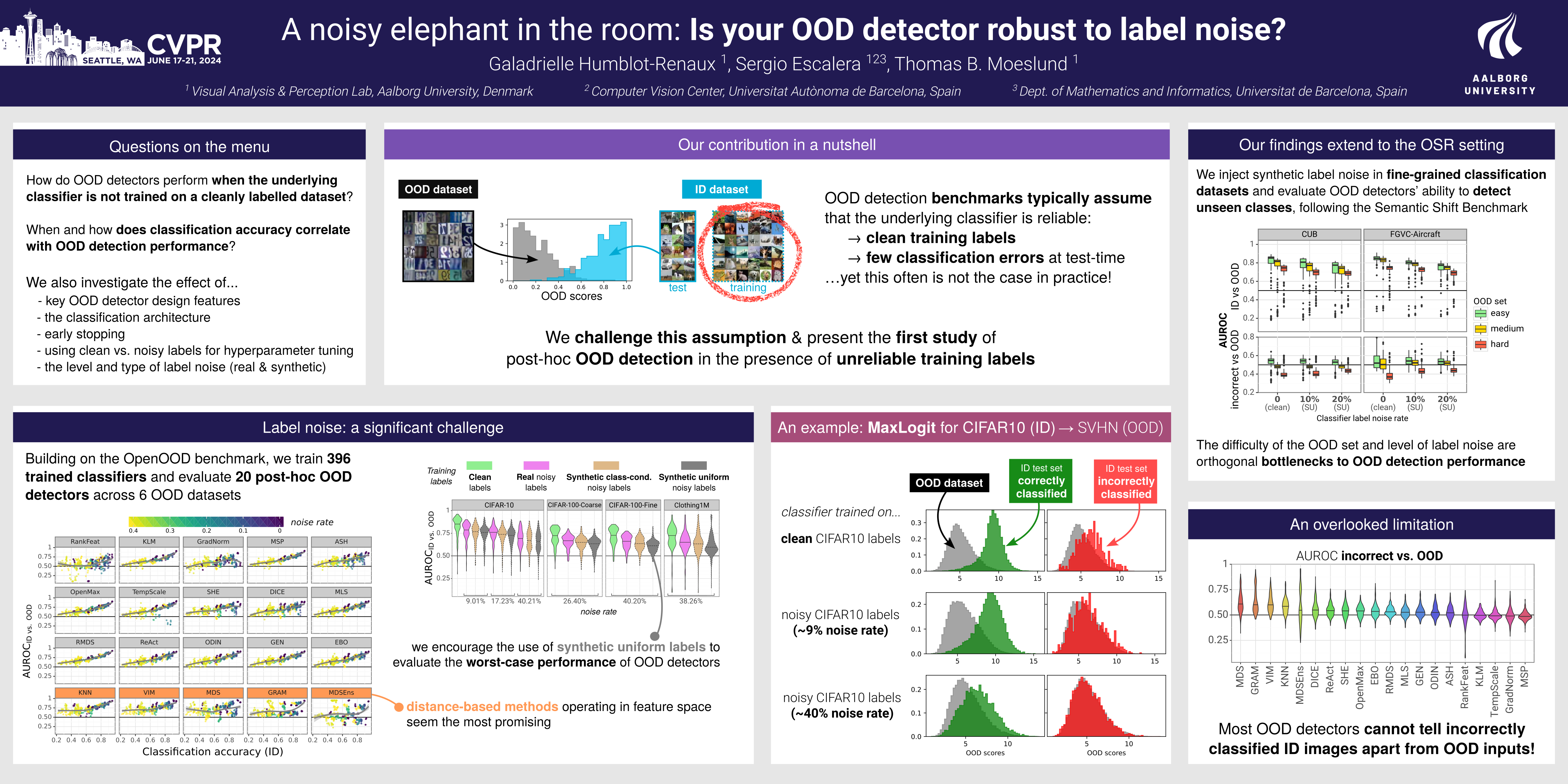

The ability to detect unfamiliar or unexpected images is essential for the safe deployment of computer vision systems. In the context of classification, the task of detecting images outside of a model's training domain is known as out-of-distribution (OOD) detection. While there has been growing research interest in developing post-hoc OOD detection methods, there has been comparatively little discussion of how these methods perform when the underlying classifier is not trained on a clean, carefully curated dataset.

In this work, we take a closer look at 20 state-of-the-art OOD detection methods in the (more realistic) scenario where the labels used to train the underlying classifier are unreliable (e.g., crowd-sourced or web-scraped labels). Extensive experiments across different datasets, noise types and levels, architectures, and checkpointing strategies provide insights into the effect of class label noise on OOD detection. They also show that poor separation between incorrectly classified in-distribution (ID) samples and OOD samples is an overlooked yet important limitation of existing methods.
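The limitation highlighted above can be measured directly. One approach is to split the ID test set by whether the classifier's prediction is correct, and then score each subset against the OOD set separately. The sketch below assumes maximum softmax probability (MSP) as the post-hoc score and AUROC as the metric. MSP is only one widely used baseline, and this is not the authors' evaluation code. The helper names (`msp_score`, `split_auroc`) and the toy logits are hypothetical and chosen purely for illustration.

```python
import math
from typing import List, Sequence, Tuple

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a single vector of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def msp_score(logits: Sequence[float]) -> float:
    """Maximum softmax probability (hypothetical helper): higher means 'more ID-like'."""
    return max(softmax(logits))

def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (the predicted class)."""
    return max(range(len(values)), key=lambda i: values[i])

def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """AUROC as P(random ID score > random OOD score), counting ties as 0.5."""
    if not id_scores or not ood_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for s_id in id_scores:
        for s_ood in ood_scores:
            if s_id > s_ood:
                wins += 1.0
            elif s_id == s_ood:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))

def split_auroc(id_samples, ood_logits) -> Tuple[float, float, float]:
    """Return AUROCs for (all ID vs OOD, correct ID vs OOD, misclassified ID vs OOD)."""
    correct, wrong = [], []
    for logits, label in id_samples:
        bucket = correct if argmax(logits) == label else wrong
        bucket.append(msp_score(logits))
    ood = [msp_score(l) for l in ood_logits]
    return auroc(correct + wrong, ood), auroc(correct, ood), auroc(wrong, ood)

# Toy, hand-made logits (NOT data from the paper): (logits, true_label)
id_samples = [
    ([4.0, 0.5, 0.1], 0),  # confident and correct
    ([0.2, 3.5, 0.3], 1),  # confident and correct
    ([1.0, 1.2, 0.9], 0),  # misclassified (predicts class 1)
    ([0.8, 0.7, 1.1], 1),  # misclassified (predicts class 2)
]
ood_logits = [[1.1, 0.9, 1.0], [0.5, 0.6, 2.0]]

print(split_auroc(id_samples, ood_logits))  # (0.75, 1.0, 0.5)
```

In this toy setup, MSP ranks every correctly classified ID sample above every OOD sample. However, it separates misclassified ID samples from OOD samples no better than chance. Reporting the three AUROCs separately exposes a weakness that the single pooled value (0.75) partly hides.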

🔗 BibTeX

@inproceedings{Humblot-Renaux_2024_CVPR,
  title={A noisy elephant in the room: Is your out-of-distribution detector robust to label noise?},
  author={Humblot-Renaux, Galadrielle and Escalera, Sergio and Moeslund, Thomas B.},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2024},
}